Ideology, experts, and Dan Kahan

Published by Skeptikos on Apr 15, 2015

Dan Kahan and his colleagues have released a new study. It's on one of my favorite topics: motivated reasoning.

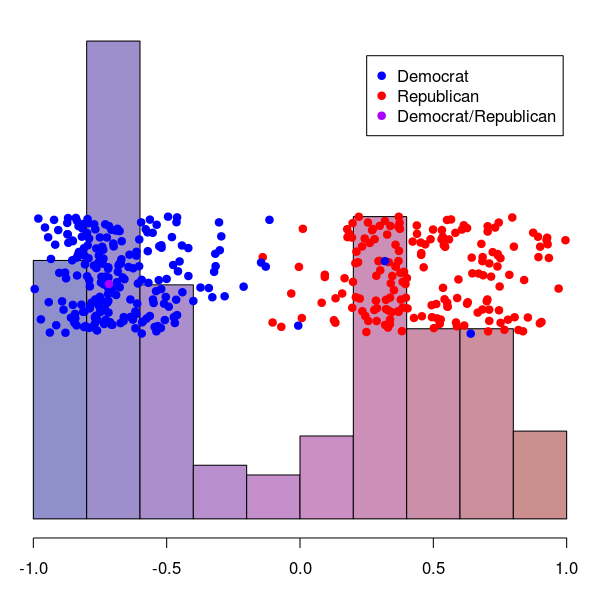

In the paper, Kahan and his colleagues ran an experiment to determine whether judges let ideology influence their decisions. They recruited 253 judges and asked them to decide hypothetical cases designed to provoke politically motivated bias. The outcome? No evidence that judges are politically biased.

According to the authors, the results support the “domain-specific immunity” hypothesis, which argues that people are good at avoiding bias in their areas of expertise. And if the domain-specific immunity hypothesis is true, people should place greater trust in other experts, specifically climatologists. Since the experimental results contradict earlier legal research, Dan Kahan also goes out of his way to describe why he believes the earlier research was wrong (in particular, research that relies on machine learning algorithms to find evidence of bias: 1, 2).

It's an interesting paper, but I don't buy the conclusions. Nor do I agree with Dan Kahan's criticism of the machine learning research.

First, the experiments: The judges didn't show any signs of political bias, but the cases they were given, one about littering and the other about disclosure of private information, don't seem all that ambiguous. If there are precedents to follow (and I'm guessing there are), these cases should be straightforward. No one should be surprised that judges hit unbiased home runs when Kahan throws them softballs. Throw ambiguous and unprecedented curveballs at them in Supreme Court conditions, and they'd probably strike out.

Second, the machine learning approaches:

Before I continue, a disclosure: I've been measuring state legislator ideology with rollcall scaling techniques for about four years. I'm working on research that draws on rollcall scaling, and I'm even giving a presentation about rollcall scaling on Monday. Computationally, rollcall scaling and machine learning are nothing alike, but the approach to ideology is similar, and Kahan's criticism hits close to home. I think readers will find my arguments compelling regardless.

I'm not going to respond to Kahan's long string of arguments regarding model evaluation because his whole analysis is fundamentally misguided. It's easier to start from scratch. (Don't take it too hard. Did I mention I've been doing this for four years?)

So. You're interested in ideology, and you have a machine learning algorithm that predicts what individual Supreme Court justices will decide, more or less based on ideology. (If you're interested in predicting the outcomes of Supreme Court cases, you could aggregate all 9 individual predictions to predict the outcome. But since we're only investigating ideology, we don't care about that. Only the predictions for individual judges matter.)

Now we need a baseline model, to get a sense of how well this machine learning program performs. A person who knew nothing about judges might look at the percentage of cases where the justices vote to overturn the lower court's ruling, and use that to create a very simple model of judicial decision-making. Let's say judge A votes to overturn 70% of the time. Our naïve observer puts together his model: it says that judge A decides randomly which way to vote, with a 70% chance of voting to overturn in every case. We could say that, in every case, judge A rolls a 10-sided die. If the die shows 1-7, he votes to overturn. If the die shows 8-10, he votes not to overturn.

That's our baseline model. And we can calculate the exact likelihood that it would produce the actual votes of judge A. From basic probability, that likelihood is

$$0.7^{(\text{number of votes to overturn})} \times 0.3^{(\text{number of votes to uphold})}$$

The baseline model does an OK job of predicting the votes. (Technically, we should treat 0.7 as a parameter and calculate the likelihood of the model over all possible parameter values. That requires calculus and a computer, but it's not bad. Then we'd have to repeat this for every justice and multiply the results, and then repeat this for every year and multiply those results.)
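For readers who want to see the arithmetic, here is a minimal Python sketch of both versions of the baseline for a single justice. The vote counts are made up for illustration, the function names are mine, and the "all possible parameter values" version assumes every value of the parameter between 0 and 1 is equally plausible beforehand (a uniform prior), which is my assumption and not something the papers specify. Everything is done in logs, because multiplying hundreds of numbers below 1 underflows quickly.

```python
import math

def baseline_log_likelihood(n_overturn, n_uphold, p_overturn):
    """Log of p^(votes to overturn) * (1 - p)^(votes to uphold)."""
    return (n_overturn * math.log(p_overturn)
            + n_uphold * math.log(1.0 - p_overturn))

def integrated_log_likelihood(n_overturn, n_uphold):
    """Log of the same likelihood integrated over every p in [0, 1].

    Assumes a uniform prior on p (an illustrative assumption). The integral
    of p^k * (1 - p)^m over [0, 1] equals k! * m! / (k + m + 1)!, computed
    here with log-gamma to avoid huge factorials.
    """
    return (math.lgamma(n_overturn + 1)
            + math.lgamma(n_uphold + 1)
            - math.lgamma(n_overturn + n_uphold + 2))

# Hypothetical counts for "judge A": 70 votes to overturn, 30 to uphold.
n_overturn, n_uphold = 70, 30

fixed = baseline_log_likelihood(n_overturn, n_uphold, 0.7)
integrated = integrated_log_likelihood(n_overturn, n_uphold)

print("log-likelihood with p fixed at 0.7:", fixed)
print("log-likelihood integrated over p:  ", integrated)
```

Multiplying results across justices and years becomes adding their log-likelihoods, so the full calculation is a sum of these terms.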

Does the machine learning model do better? I suspect it does much better, but I can't prove that because the papers don't provide the necessary information. In theory, we could calculate the likelihood with the same method as above, only substituting 0.7 and 0.3 with the numbers produced by the algorithm. Dan Kahan is right to worry about evaluating models, and the authors really should provide those numbers! If a machine learning model based on ideology can't beat a stupid model based on guessing, then we have no reason to attribute votes to ideology.
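Here is roughly what that comparison would look like if the papers did publish per-vote predictions. This is only a sketch: the votes and the "model" probabilities below are invented for illustration, not taken from any of the papers, and it assumes the algorithm outputs a probability of voting to overturn for each case.

```python
import math

def log_likelihood(votes, probs):
    """Log-likelihood of observed votes under predicted probabilities.

    votes: 1 = voted to overturn, 0 = voted to uphold.
    probs: predicted probability of voting to overturn, one per case.
    Probabilities of exactly 0 or 1 would break the logarithm, so this
    sketch assumes every prediction is strictly between them.
    """
    total = 0.0
    for vote, p in zip(votes, probs):
        total += math.log(p if vote == 1 else 1.0 - p)
    return total

# Invented example: ten votes by one justice, seven of them to overturn.
votes = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]

# Baseline: the same 70% guess in every case.
baseline_probs = [0.7] * len(votes)

# Hypothetical output of an ideology-based model (made-up numbers).
model_probs = [0.9, 0.8, 0.2, 0.85, 0.3, 0.9, 0.6, 0.4, 0.95, 0.8]

baseline_ll = log_likelihood(votes, baseline_probs)
model_ll = log_likelihood(votes, model_probs)

print("baseline log-likelihood:", baseline_ll)
print("model log-likelihood:   ", model_ll)
print("difference (model - baseline):", model_ll - baseline_ll)
```

A positive difference means the model's predictions make the observed votes more likely than the die-rolling baseline does. With these invented numbers the model wins, but that says nothing about the real papers.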

But even if we can't run the calculations ourselves right now, we still have a couple of good reasons to think ideology influences Supreme Court decision-making. First is that Supreme Court justices have obvious ideological leanings and those leanings match up very suspiciously with their votes. That's an extremely improbable coincidence. Second is that these machine learning papers have been around for more than a decade, and lots of smart people have already seen them. It's possible that Dan Kahan found a large, obvious error that every other researcher missed, but it's not likely.

So are judges and experts still subject to bias in their fields of expertise? Yes, I think they are.